AI Strategy

Dec 8, 2025

The Hidden Cost of AI Adoption: AI Technical Debt

Ramon Ignacio

AI Measurement Account Executive

Everyone's racing to adopt AI. Marketing signed up for ChatGPT last quarter. Engineering discovered Claude. Someone in finance keeps talking about "this amazing tool" they found on Reddit. Innovation is happening everywhere.

So is complete disorder. And honestly? It's creating a mess we'll be cleaning up for years.

What Is AI Technical Debt?

If you've spent time in software development, you know technical debt: the future costs that pile up when teams take shortcuts today. AI technical debt takes this concept and cranks it up to eleven. It includes the tangled dependencies around data, models, operations, and (increasingly) the explosion of AI tools flooding every corner of the enterprise.

Eric Johnson, CIO of PagerDuty, states: “Companies rushing to build custom AI solutions today risk creating new forms of technical debt that could prove more costly and complex to unwind than the architectural challenges we’ve faced in the past.” (CIO.com, March 25, 2025)

With AI seeping into every business function, all technical debt is becoming AI technical debt. We just haven't admitted it yet.

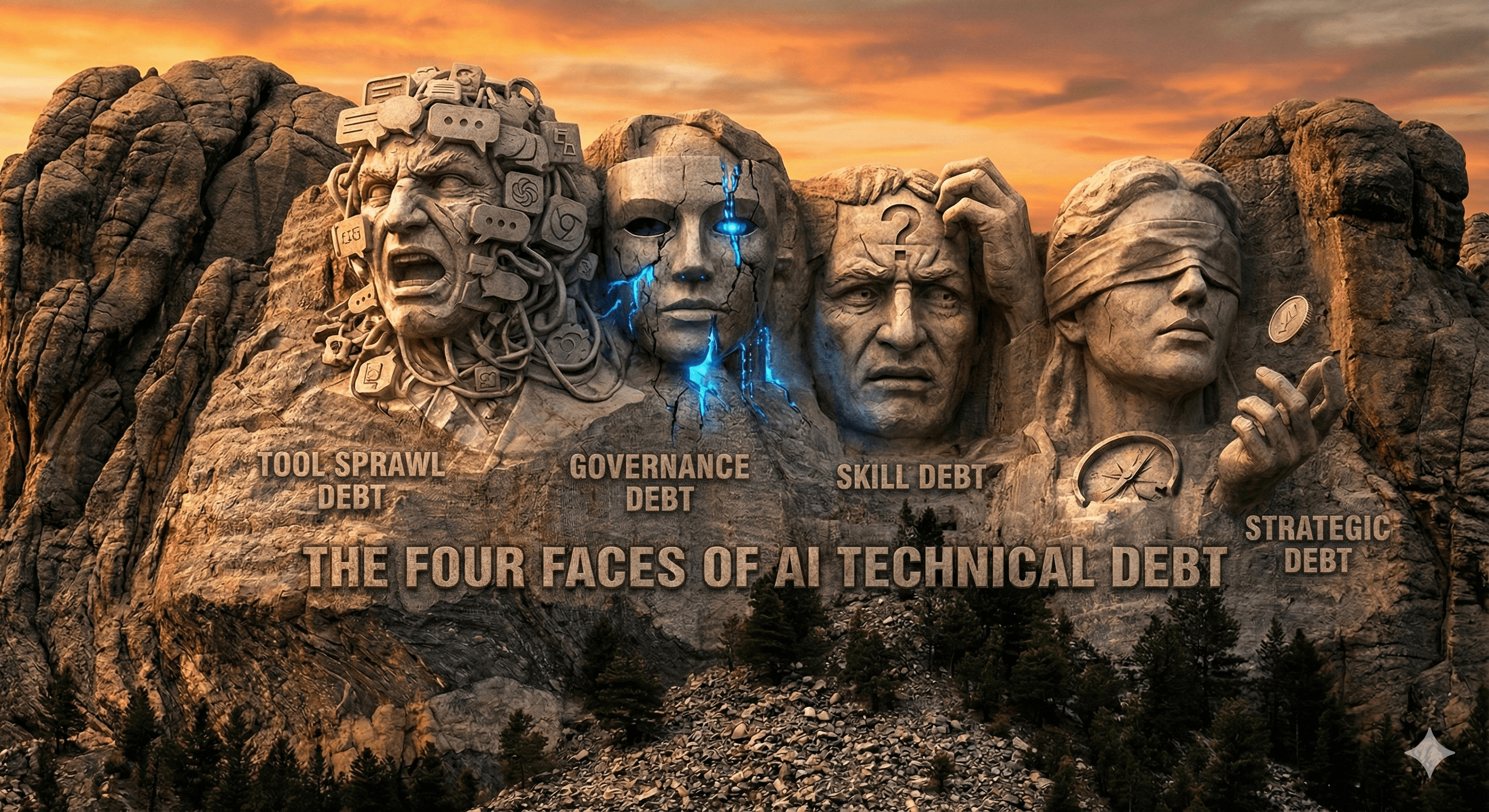

The Four Faces of AI Technical Debt

AI technical debt doesn't send you a calendar invite. It accumulates quietly across four dimensions while everyone's distracted by the shiny new chatbot or image generator.

1. Tool Sprawl Debt

When teams adopt AI tools without talking to each other, you end up with overlapping capabilities, fragmented workflows, and redundant licenses. Three departments paying for three different summarization tools? That's not innovation. That's a nightmare for procurement and the CFO.

2. Governance Debt

Shadow AI (tools adopted outside IT oversight) creates compliance gaps and data vulnerabilities. Every unapproved tool represents a potential data leak waiting to happen. And by the time you discover the exposure, everyone's already built workflows around it.

3. Skill Debt

Without visibility into how teams actually use AI, training investments become expensive guesswork. You can't improve what you can't measure. Poor prompt engineering, inefficient workflows, and underutilized features compound into productivity losses that nobody can quite explain.

4. Strategic Debt

When you can't measure AI's impact, every investment becomes a coin flip. You keep funding tools that aren't delivering. You underinvest in what's working. And when the board asks about ROI, you discover that "vibes-based analytics" isn't a compelling answer.

Why Managing AI Technical Debt Matters Now

The stakes look different depending on where you sit in the org chart:

For CIOs:

Shadow AI creates data exposure vulnerabilities that your existing security stack simply can't see coming

Unmanaged tool sprawl compounds integration costs and builds brittle architectures that slow down every future project (and frustrate your best engineers)

For CFOs:

Teams expense personal AI subscriptions or use unapproved enterprise licenses, creating budget leakage that hides in plain sight

Without usage and impact data, you can't determine whether AI investments deliver their promised productivity gains, or if everyone's just using ChatGPT to write birthday messages

For CEOs:

When departments adopt AI in silos, you lose the ability to leverage organization-wide learnings and competitive advantages

As AI regulations tighten globally, undocumented usage becomes a liability that threatens reputation and market access (regulators have zero sense of humor about this)

Why Traditional Approaches Fall Short

Most organizations try to manage AI adoption through policy alone. Approved tool lists. Usage guidelines. Training mandates. The well-meaning memo from IT.

The problem? Policy without measurement is just hope with a company logo on it.

You can declare that teams should only use approved AI tools. But without visibility, you have no idea if they're complying. Or if the approved tools even meet their needs. Or if Dave in accounting has been running the entire Q3 forecast through a free-tier AI he found on Product Hunt.

The Measurement-First Approach

At Larridin, we believe the antidote to AI technical debt is visibility. Not Big Brother-style surveillance. Visibility.

Our platform, Scout, provides the intelligence layer that transforms AI experimentation into measurable, compliant, and reportable business performance. Here's what that looks like in practice:

AI Usage Telemetry: Scout detects interactions with hundreds of AI applications across your organization. You see what tools people use, who uses them, and how frequently. No more blind spots. No more "wait, we have HOW many Copilot licenses?"

License Intelligence: Scout distinguishes between personal accounts and enterprise licenses. You identify compliance gaps before they become audit findings and optimize spend based on actual usage patterns.

Proficiency Measurement: Scout doesn't just track whether people use AI. It measures how effectively they use it. Our use-case classification identifies whether teams leverage AI for research, writing, analysis, coding, etc., and how proficiently they do each.

Configurable Governance: You set policies that warn, block, or redirect users to approved alternatives. You enforce responsible adoption before bad patterns calcify into technical debt.

Impact Scoring: Our three-signal model (Utilization × Proficiency × Impact) gives leadership the ROI data they need to make informed AI investment decisions. Real numbers. Not vibes.
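To make the three-signal idea concrete, here is a minimal Python sketch of a multiplicative score. The post names only the three signals and the × operator; the 0.0 to 1.0 scale, the field meanings in the comments, and the names `ToolSignals` and `combined_score` are assumptions for illustration, not Scout's actual scoring.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolSignals:
    """Hypothetical per-tool signals, each assumed to be normalized to 0.0-1.0."""
    utilization: float  # assumed: share of licensed users who are active
    proficiency: float  # assumed: how effectively those users work with the tool
    impact: float       # assumed: measured business effect attributed to the tool


def combined_score(signals: ToolSignals) -> float:
    """Multiply the three signals; any weak signal pulls the whole score down."""
    for name in ("utilization", "proficiency", "impact"):
        value = getattr(signals, name)
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0.0 and 1.0, got {value}")
    return signals.utilization * signals.proficiency * signals.impact


# Illustrative values only, not real measurements.
widely_used_low_impact = ToolSignals(utilization=0.9, proficiency=0.5, impact=0.2)
niche_high_impact = ToolSignals(utilization=0.3, proficiency=0.8, impact=0.9)

print(round(combined_score(widely_used_low_impact), 3))
print(round(combined_score(niche_high_impact), 3))
```

With these made-up inputs, the niche tool scores higher (about 0.22 versus 0.09) even though far fewer people use it. That is the point of multiplying rather than adding: high utilization alone can't hide low impact.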

Privacy by Design

Scout's zero-data-retention (ZDR) architecture means we never capture prompts, outputs, or PII. We measure the patterns of AI usage, not the content. Your employees' conversations with AI stay private. We just help you understand the shape of what's happening.

This approach also means Scout itself doesn't create new compliance debt. For regulated industries, that distinction matters a lot.
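One common way to measure patterns without touching content is to allowlist metadata fields at the point of capture. The Python sketch below shows that general technique only. The field names, the `UsageEvent` type, and the `to_usage_event` helper are hypothetical and should not be read as Scout's schema or architecture.

```python
from dataclasses import dataclass, asdict

# Hypothetical allowlist: only these metadata fields are ever kept.
ALLOWED_FIELDS = ("tool", "department", "license_type", "use_case", "timestamp")


@dataclass(frozen=True)
class UsageEvent:
    tool: str
    department: str
    license_type: str  # e.g. "enterprise" or "personal"
    use_case: str      # e.g. "research", "writing", "analysis", "coding"
    timestamp: str


def to_usage_event(raw: dict) -> UsageEvent:
    """Copy allowlisted fields only; anything else in `raw` is never read."""
    return UsageEvent(**{field: raw[field] for field in ALLOWED_FIELDS})


# Made-up interaction for illustration.
raw_interaction = {
    "tool": "ChatGPT",
    "department": "finance",
    "license_type": "personal",
    "use_case": "analysis",
    "timestamp": "2025-12-08T09:30:00Z",
    "prompt": "Summarize the Q3 forecast",  # content: must not be retained
    "user_email": "dave@example.com",       # PII: must not be retained
}

event = to_usage_event(raw_interaction)
print(asdict(event))
```

An allowlist fails safe: a new content field added upstream is dropped by default, whereas a blocklist would let it through until someone noticed.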

The Bottom Line

AI technical debt becomes inevitable when you can't see what's happening. Every unmeasured AI decision represents a potential liability: a compliance gap, a wasted license, a missed training opportunity, a strategic misallocation.

Scout doesn't fix technical debt after it piles up. It provides the visibility and governance infrastructure to identify debt as it forms and to keep organizations from making the blind decisions that create it to begin with.

Without measurement, every AI decision is a bet. Scout turns bets into informed investments.

And honestly, we could all use fewer bets right now.

A version of this post was originally published on LinkedIn.